
FAQ - Frequently Asked Questions

What should I do if a problem arises?

You can contact the technical support of the CESNET Storage Department via email:

support(at)cesnet.cz

General questions

Forgotten password for access to data storage

Problem description: I have forgotten the password I used to access my CESNET data storage (the password set via the VO application form).

Solution: You can set a new password in the Perun system. The Perun password form changes the password for most virtual organizations in the e-infrastructure, including, for example, your MetaCentrum password. To access the form, you have to log in through the eduID.cz federation.

If you applied for VO storage and the registration process did not ask you to set a password, you probably already have a MetaCentrum account. If you have forgotten your primary password, you can change it via the Perun form mentioned above.

Connection timeout

Problem description: Why does my connection time out?

Solution: CESNET data storage uses the HSM (Hierarchical Storage Management) architecture. In an HSM system, data that has not been accessed for some time may be migrated to lower (slower) tiers. Before you can work with such data, it has to be migrated back to a higher (faster) tier, which slows down access. These delays can cause a timeout.

To download your files successfully, increase the timeout value in the application you use. Some applications may repeatedly ask for your password; in that case, check the Remember my password box.
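If an application does not let you raise the timeout far enough, a script can instead retry the whole transfer with progressively longer timeouts, giving the storage time to recall the data from the slower tier. The following Python sketch is only an illustration: the timeout values (60, 300 and 900 s) are our assumption rather than a CESNET recommendation, it needs Python 3.10 or later (where `socket.timeout` is an alias of `TimeoutError`), and `download_ftps` with its credentials and paths is a hypothetical example built on the standard `ftplib` module.

```python
import ftplib
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def with_growing_timeouts(attempt: Callable[[float], T],
                          timeouts: Sequence[float] = (60, 300, 900)) -> T:
    """Call attempt(timeout) with each timeout in turn until one succeeds.

    Data that HSM has migrated to a slower tier may take minutes to become
    readable, so each later attempt is given more time. Re-raises the last
    TimeoutError if every attempt times out.
    """
    if not timeouts:
        raise ValueError("at least one timeout is required")
    last_error = None
    for timeout in timeouts:
        try:
            return attempt(timeout)
        except TimeoutError as exc:  # socket.timeout is an alias since Python 3.10
            last_error = exc
    raise last_error


def download_ftps(timeout: float) -> None:
    """Hypothetical attempt: host from the FTP/FTPS address list, placeholders elsewhere."""
    with ftplib.FTP_TLS("ftp.du4.cesnet.cz", timeout=timeout) as ftp:
        ftp.login("username", "password")
        ftp.prot_p()  # encrypt the data channel too
        # Reopened with "wb" on every attempt, so a retry starts from scratch.
        with open("local-copy.dat", "wb") as fh:
            ftp.retrbinary("RETR path/to/file.dat", fh.write)
```

Usage: `with_growing_timeouts(download_ftps)`. Wrapping the whole download, not just the login, matters because the delay typically appears when the file itself is first read.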

Joining more identities into one

Problem description: How do I consolidate several identities into one?

Solution: If you have identities from several organizations and want to be able to log in to the user management system (Perun) with any of them, you need to consolidate them. Perun then treats them as one identity, no matter which one you use to log in.

The procedure below consolidates two fictitious identities, 4352@cvut.cz and jura03@fel.cvut.cz. If you have more than two identities, repeat it as needed.

- Log in with your preferred identity.

- In the section Add new way of signing in…, choose eduID.cz identity (or another one if needed).

- Log in with the chosen identity.

If no problem occurred, you can now use either 4352@cvut.cz or jura03@fel.cvut.cz to access Perun. From the system's point of view, both are one and the same identity. If you run into any difficulties, please contact us at support(at)cesnet.cz.

Logout from federated web services

Problem description: How can I log out from all federated services?

Solution: Logging out of an application does not log you out of the eduID.cz federation. For some time afterwards, you can log in to the application again without authenticating (without entering your login and password). Therefore, if other people have access to your computer, we strongly recommend closing your web browser. Closing the browser logs you out of all applications you visited through the eduID.cz federation.

List of all protocols and services

Problem description: Are you lost among the many protocols and services? Not sure which protocol or service suits you?

Solution: See the protocols and services overview with recommendations.

Not sure which address to use for a particular service?

Problem description: Are you lost among the many protocols and services? Not sure which address to use for a particular service?

Solution:

ownCloud

FileSender

Globus

Ostrava: ducesnet#du4

Jihlava: ducesnet#du5

FTP/FTPS

Ostrava: ftp.du4.cesnet.cz

Jihlava: ftp.du5.cesnet.cz

rsync, SCP/SFTP, SSH

Ostrava: ssh.du4.cesnet.cz

Jihlava: ssh.du5.cesnet.cz

Ostrava: ssh6.du4.cesnet.cz

NFS

Ostrava: nfs.du4.cesnet.cz

Jihlava: nfs.du5.cesnet.cz

Ostrava: nfs6.du4.cesnet.cz

Samba

Ostrava: samba.du4.cesnet.cz

Jihlava: samba.du5.cesnet.cz

Ostrava: samba6.du4.cesnet.cz

Unknown directories in my home directory

Problem description: After connecting to my data storage, I see some directories that I did not create.

Solution: Your home directory on the data storage contains several subdirectories that represent migration policies. It is very important to become familiar with this directory structure before you start uploading any data.

The amount of stored data

Problem description: How do I get information about my quota and occupied space?

Solution: To check your quota and the amount of stored data, you can use the Accounting service.

ownCloud

We do not recommend using Internet Explorer 11: the JavaScript required by the ownCloud web interface does not work properly in it.

Internet Explorer 6 uses the vulnerable SSLv3 protocol. For security reasons, our servers require TLS 1.0 or higher, so you need a web browser that uses TLS rather than SSL.

I've just logged in and I can't see my files

Problem description: I logged in through a different identity provider than the first time. Now I don't see my files. Why?

Solution: You have probably used a different identity for this login than in the past. If you use identities from several organizations, a separate ownCloud account was created for each of them (past and current). To fix this, your identities need to be joined (consolidated) into one; afterwards you will see the same files no matter which identity you use. To consolidate your identities, please contact us at support(at)cesnet.cz.

Repeating request for ownCloud client authorization

Problem description: After every system startup, an authorization request appears and keeps opening my web browser.

Solution: The issue is related to how the ownCloud access token is stored. If the ownCloud client starts before the subsystem that holds the stored access token, the client has to ask for authorization again.

On Linux, you need to use the keyring service provided by your environment (e.g. Keyring, MATE Keyring and so on). Even with a keyring service, the ownCloud client may still start before that subsystem. On Windows, the bug is very similar. The re-authorization problem will be fixed in a new version of the ownCloud client.

After I uploaded my file I cannot see it

Problem description: I uploaded a file via the web interface. After the upload finished, I cannot see the file. Why?

Solution: Uploading a single file via the web interface is limited to a maximum of 4 hours. This restriction does not apply to the ownCloud Desktop client, so please use the Desktop client to upload your files; see the section Desktop client.
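Whether a file can make it through the web interface within the 4-hour limit is simple arithmetic: at a sustained 100 Mbit/s, 4 h × 3600 s/h × 100 Mbit/s ÷ 8 bit/B gives 180 GB. The Python sketch below does the same calculation; it assumes a constant upload rate and ignores protocol overhead, so treat anything close to the limit as not fitting.

```python
WEB_UPLOAD_LIMIT_S = 4 * 60 * 60  # 4-hour limit for one file in the web interface


def max_web_upload_bytes(mbit_per_s: int) -> int:
    """Largest file (in bytes) that fits into the limit at the given rate."""
    return WEB_UPLOAD_LIMIT_S * mbit_per_s * 1_000_000 // 8


def fits_web_upload(size_bytes: int, mbit_per_s: int) -> bool:
    """True if the upload should finish within the limit (no overhead assumed)."""
    return size_bytes <= max_web_upload_bytes(mbit_per_s)


if __name__ == "__main__":
    for rate in (10, 100, 1000):
        gb = max_web_upload_bytes(rate) / 10**9
        print(f"{rate:>5} Mbit/s -> at most about {gb:.0f} GB")
```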

Problems related to Desktop Client connection

1) Problem description: My client cannot connect to the ownCloud server even though I am using the correct password.

1) Solution:

ownCloud version 10 and the login procedures deployed in June 2018 require desktop client version 2.4.0 or later.

The desktop client usually checks for new versions automatically, so it can be updated as soon as a new version is released.

For updates, we recommend the ownCloud web page, where official desktop clients are available for various platforms, i.e. Windows, OS X and Linux. On Linux, it is also useful to add the repository; with it, you can install new versions the usual way (apt-get update, yum update …).

Problems with file sharing

Problem description: I am trying to share a file with my colleague and I cannot find his/her username (email address).

Solution: For security reasons, searching for users is restricted. To share a file with a user, that user must be registered in the service https://owncloud.cesnet.cz/. You can then share via your ownCloud contacts. Another option is to know your colleague's full username. The username is not necessarily the email address you are used to. Your colleague can find the username on the “Personal” page of the ownCloud web interface (log in to the web interface → click your name in the upper right corner → Personal).

Synchronization of the executable file

Problem description: I synced an executable file from one computer to another. However, the file is not executable on the second computer.

Solution: The ownCloud server does not support transferring file permissions, so they are lost when a file is transferred from one client to another. More info at: https://github.com/owncloud/core/issues/6983
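Because the permissions are lost in transit, the executable bit has to be set again on the receiving computer, for example with chmod +x. As a Python sketch of the same fix (our own helper, not part of ownCloud), the function below grants execute permission to each class (owner, group, others) that already has read permission; which files actually need it is something only you know.

```python
import os
import stat


def restore_exec_bit(path: str) -> int:
    """Re-add execute permission for every class (user/group/other) that can read.

    A synced 0o644 file becomes 0o755 and a 0o600 file becomes 0o700.
    Returns the new permission bits.
    """
    mode = stat.S_IMODE(os.stat(path).st_mode)
    for read_bit, exec_bit in ((stat.S_IRUSR, stat.S_IXUSR),
                               (stat.S_IRGRP, stat.S_IXGRP),
                               (stat.S_IROTH, stat.S_IXOTH)):
        if mode & read_bit:
            mode |= exec_bit
    os.chmod(path, mode)
    return mode
```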

Problem with synchronization of some directories

Problem description: ownCloud refuses to sync some folders. It says: “There are available new directories, which have not been synced because of their large size see: ownCloud/… (directory list).”

Solution: Open the list of folders and select all the large folders. Then click “Apply” at the bottom of the error message.

Problem with synchronization of the files with long name

Problem description: ownCloud refuses to upload a file with a very long name. Synchronization ends with “Connection closed” or “Bad Request”.

Solution: Maximum length of the file name is 210 characters.
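Before synchronizing a large folder, you can look for names that exceed the limit. The Python sketch below assumes the limit applies to each individual file or folder name (not the whole path) and counts characters, as stated above.

```python
import os
from typing import List

MAX_NAME_LEN = 210  # maximum file name length stated above


def too_long_names(top: str, limit: int = MAX_NAME_LEN) -> List[str]:
    """Return paths under `top` whose file or folder name exceeds `limit` characters."""
    hits = []
    for root, dirs, files in os.walk(top):
        for name in dirs + files:
            if len(name) > limit:
                hits.append(os.path.join(root, name))
    return sorted(hits)
```

For example, `too_long_names(os.path.expanduser("~/ownCloud"))`, where `~/ownCloud` is only an assumed location of your local sync folder.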

Issue logging in via the WebDAV protocol in Windows

Problem description: After I enter my credentials and press Enter, the same credentials dialog box keeps appearing.

Solution: Even though the credentials dialog box keeps appearing, please check the drives in My Computer. If the network drive is still not connected, you need to edit the Windows registry. Follow the guide below.

Edit Windows Registry Key


1. Click Start, type regedit, and then press Enter.

2. Locate and then select the following registry subkey:

HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\WebClient\Parameters

3. On the Edit menu, point to New, and then click Multi-String Value.

4. Type AuthForwardServerList, and then press Enter.

5. On the Edit menu, click Modify.

6. In the Value data box, type the URL of the server that hosts the web share (https://owncloud.cesnet.cz/remote.php/webdav), and then click OK.

7. Exit Registry Editor.

Object storage S3

Multipart upload

Problem description: I cannot upload a file larger than 5 GB. I get the error “Your proposed upload exceeds the maximum allowed object size”.

Problem solution: You have to use the so-called multipart upload, which uploads one object as a set of smaller parts. Once the upload is complete, the parts are again represented as a single object. Multipart upload can also increase the throughput of your upload. A single part must not exceed 5 GB. Most clients let you set the threshold above which multipart upload is used; a typical value is 100 MB.
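What a client does with that threshold can be sketched in a few lines: the object is cut into consecutive byte ranges, each uploaded as one part. This Python sketch only plans the parts; it assumes the "100 MB" default means 100 MiB and does not talk to the storage.

```python
from typing import List, Tuple

MiB = 1024 * 1024


def plan_parts(size: int, part_size: int = 100 * MiB) -> List[Tuple[int, int]]:
    """Split an object of `size` bytes into (offset, length) pairs for multipart upload."""
    if part_size <= 0:
        raise ValueError("part_size must be positive")
    # Every part is part_size bytes except possibly the last, shorter one.
    return [(offset, min(part_size, size - offset))
            for offset in range(0, size, part_size)]


def needs_multipart(size: int, threshold: int = 100 * MiB) -> bool:
    """Clients typically switch to multipart above a configurable threshold."""
    return size > threshold
```

In practice you rarely split files yourself. With the boto3 library, for instance, passing `Config=boto3.s3.transfer.TransferConfig(multipart_threshold=100 * MiB, multipart_chunksize=100 * MiB)` to `upload_file` lets boto3 perform the split and the multipart upload for you.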

FTP

Keeping original time of modification

Problem description: After uploading data to my data storage, the files do not keep their original modification time. Is it possible to solve this?

Solution: Unfortunately, the FTP protocol (in its current specification) does not allow setting the time of files. RFC 3659 defines the MDTM command, which lets you read the modification time but not change it. However, some FTP servers and clients allow changing the time with this command. Our FTP server supports this use of MDTM. Unfortunately, the clients FileZilla, WinSCP and Total Commander do not support it. If you want to use MDTM this way, you can use the command-line client lftp with the ftp:use-mdtm-overloaded option.
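For scripted uploads, the same idea can be expressed with Python's standard ftplib: upload the file, then send MDTM with a timestamp as its first argument. This is a sketch under assumptions: we assume the server accepts the form `MDTM YYYYMMDDHHMMSS filename` with the time in UTC, so verify it on a test file before relying on it; `upload_keeping_mtime` is a hypothetical helper.

```python
import ftplib
import os
import time


def mdtm_set_command(remote_name: str, mtime: float) -> str:
    """Build a two-argument MDTM command: MDTM YYYYMMDDHHMMSS <name>.

    Assumes the server interprets the timestamp as UTC.
    """
    stamp = time.strftime("%Y%m%d%H%M%S", time.gmtime(mtime))
    return f"MDTM {stamp} {remote_name}"


def upload_keeping_mtime(ftp: ftplib.FTP, local_path: str, remote_name: str) -> None:
    """Upload a file, then try to set its remote modification time."""
    with open(local_path, "rb") as fh:
        ftp.storbinary(f"STOR {remote_name}", fh)
    # Servers that only implement the read-only RFC 3659 MDTM will reject this
    # and ftplib raises ftplib.error_perm.
    ftp.sendcmd(mdtm_set_command(remote_name, os.path.getmtime(local_path)))
```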

NFS

Attaching more volumes with krb5 identities

Problem description: Can I attach more volumes with various krb5 identities?

Solution: Starting with Kerberos 1.10, you can keep your identity tickets in a directory instead of a single file, so you can have several active identities at the same time and switch between them. Usually a file is created in /tmp/ (e.g. /tmp/krb5cc_0_dX1u6IH8jO). You can change the path to a directory of your choice.

First, we prepare the directory where the identity tickets will be stored. We create it in /run/, because this directory is cleaned on reboot.

# mkdir -p /run/user/0/krb5cc
# chmod og-rwx /run/user/0/krb5cc

We set the KRB5CCNAME variable to the path of this directory.

# export KRB5CCNAME=DIR:/run/user/0/krb5cc

Now the directory for identity tickets is prepared and we can initialize the identities.

# kinit user@DOMAIN1
# kinit user@EINFRA

To list all available identity tickets, use klist -A or klist -l.

# klist -A
Ticket cache: DIR::/run/user/0/krb5cc/tkta0Hysv
Default principal: user@DOMAIN1
Valid starting     Expires            Service principal
9.6.2015 13:54:44  9.6.2015 23:54:38  krbtgt/DOMAIN1@DOMAIN1
        renew until 24/06/2015 13:54
Ticket cache: DIR::/run/user/0/krb5cc/tkt5PDllX
Default principal: user@EINFRA
Valid starting     Expires            Service principal
9.6.2015 13:54:22  9.6.2015 23:54:16  krbtgt/EINFRA@EINFRA
        renew until 16/06/2015 13:54
# klist -l
Principal name                 Cache name
--------------                 ----------
user@DOMAIN1                   DIR::/run/user/0/krb5cc/tkta0Hysv
user@EINFRA                    DIR::/run/user/0/krb5cc/tkt5PDllX

The klist command shows which identity is active.

# klist
Ticket cache: DIR::/run/user/0/krb5cc/tkta0Hysv
Default principal: user@DOMAIN1
Valid starting     Expires            Service principal
9.6.2015 13:54:44  9.6.2015 23:54:38  krbtgt/DOMAIN1@DOMAIN1
        renew until 24/06/2015 13:54

To switch identities, use the kswitch command.

# kswitch -p user@EINFRA
# klist
Ticket cache: DIR::/run/user/0/krb5cc/tkt5PDllX
Default principal: user@EINFRA
Valid starting     Expires            Service principal
9.6.2015 13:54:22  9.6.2015 23:54:16  krbtgt/EINFRA@EINFRA
        renew until 16/06/2015 13:54
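When you script this switching, for example before each mount, you can read the identity-to-cache mapping from `klist -l` instead of typing principals by hand. The Python sketch below parses output in the `klist -l` format shown above and wraps `kswitch -p`; it assumes the MIT Kerberos tools are installed, and the helper names are our own.

```python
import subprocess
from typing import Dict


def parse_klist_l(output: str) -> Dict[str, str]:
    """Map each principal to its credential cache, from `klist -l` output."""
    caches: Dict[str, str] = {}
    for line in output.splitlines():
        fields = line.split()
        # Skip the header, the dashed separator and anything that is not a
        # plain "principal cache" pair.
        if len(fields) != 2 or fields[0].startswith("-") or "@" not in fields[0]:
            continue
        principal, cache = fields
        caches[principal] = cache
    return caches


def list_identities() -> Dict[str, str]:
    """Run `klist -l` (it uses the KRB5CCNAME set in the environment)."""
    result = subprocess.run(["klist", "-l"], capture_output=True, text=True, check=True)
    return parse_klist_l(result.stdout)


def switch_identity(principal: str) -> None:
    """Same as `kswitch -p PRINCIPAL`."""
    subprocess.run(["kswitch", "-p", principal], check=True)
```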

To attach two NFSv4 volumes with different identities, run mount while the first identity is active, then switch identities with kswitch and attach the second volume while the second identity is active.

# mount -o sec=krb5i,proto=tcp,port=2049,intr storage-jihlava1-cerit.metacentrum.cz:/ /mnt/storage-cerit
# kswitch -p user@EINFRA
# mount -o rw,nfsvers=4,hard,intr,sec=krb5i nfs.du4.cesnet.cz:~/ /mnt/storage-du4

Issue with connecting via NFS to the new Data Storage

Problem description: I tried to connect to the new Data Storage using the NFS protocol, but it did not work.

Solution: For the Ostrava Data Storage, you have to add the following line to the [domain_realm] section of krb5.conf; proceed similarly for the other new Data Storages.

.du4.cesnet.cz = EINFRA-SERVICES
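To check which realm a host maps to with your krb5.conf, the Python sketch below reads the [domain_realm] section and applies a simplified version of the lookup: an exact host entry wins, otherwise the longest matching entry that starts with a period. Real Kerberos libraries apply further rules and fallbacks, so treat this only as a quick sanity check of the line above.

```python
from typing import Dict, Optional


def domain_realm_map(krb5_conf: str) -> Dict[str, str]:
    """Collect `name = REALM` pairs from the [domain_realm] section."""
    mapping: Dict[str, str] = {}
    section = None
    for raw in krb5_conf.splitlines():
        line = raw.strip()
        if not line or line[0] in "#;":  # blank line or comment
            continue
        if line.startswith("[") and line.endswith("]"):
            section = line[1:-1].strip()
        elif section == "domain_realm" and "=" in line:
            name, realm = (part.strip() for part in line.split("=", 1))
            mapping[name.lower()] = realm
    return mapping


def realm_for_host(krb5_conf: str, host: str) -> Optional[str]:
    """Exact host entry first, then the longest matching '.domain' entry."""
    mapping = domain_realm_map(krb5_conf)
    host = host.lower().rstrip(".")
    if host in mapping:
        return mapping[host]
    labels = host.split(".")
    # i = 1 gives the longest suffix, so the most specific entry is found first.
    for i in range(1, len(labels)):
        suffix = "." + ".".join(labels[i:])
        if suffix in mapping:
            return mapping[suffix]
    return None
```

Once the line is in place, `realm_for_host(open("/etc/krb5.conf").read(), "nfs.du4.cesnet.cz")` should return "EINFRA-SERVICES".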

Issue with connecting NFS via Kerberos ticket

Problem description: I tried to connect via the NFS protocol, but I got the following error:

mount.nfs: Network is unreachable

Solution: The issue is caused by the rpc-gssd service failing to start; check the service status:

systemctl status rpc-gssd

Condition: start condition failed at Thu 2018-05-06 06:12:22 CET; 20s ago

└─ ConditionPathExists=/etc/krb5.keytab was not met

To resolve this issue, create an empty file in the /etc folder with the following command:

touch /etc/krb5.keytab

Now you can restart rpc-gssd service and remount your storage.

FileSender

Internet Explorer 6 uses the vulnerable SSLv3 protocol. For security reasons, our servers require TLS 1.0 or higher, so you need a web browser that uses TLS rather than SSL.

Sending notification to multiple recipients

Problem description: How do I notify multiple recipients in FileSender about a stored file?

Solution: Separate the recipients' email addresses with commas.

Sending multiple files

Problem description: How do I send multiple files?

Solution:

You can choose between two options for sending multiple files at once. The first is to create an archive using WinZip, tar, etc.

The second is to use a testing version of FileSender (filesender2.cesnet.cz). Because this is a testing version, we cannot guarantee how this instance behaves. If you find a bug or strange behavior, please contact us at support(at)cesnet.cz.
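For the first option any archiver works. As an illustration, this Python sketch uses the standard tarfile module to bundle several files or folders into a single .tar.gz that you then upload through FileSender as one file; the archive and file names are placeholders.

```python
import os
import tarfile
from typing import Sequence


def pack_for_sending(archive_path: str, paths: Sequence[str]) -> str:
    """Bundle files and folders into one gzip-compressed tar archive."""
    with tarfile.open(archive_path, "w:gz") as tar:
        for path in paths:
            # Store each entry under its own name rather than the full local path;
            # folders are added recursively.
            tar.add(path, arcname=os.path.basename(os.path.normpath(path)))
    return archive_path
```

For example, `pack_for_sending("results.tar.gz", ["report.pdf", "data/"])`; the recipient unpacks it with `tar -xzf results.tar.gz` or any archiver that understands .tar.gz.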

FileSender requirement for your browser

Problem description: What does FileSender require from my browser?

Solution: Your browser must support HTML5 or Adobe Flash (with Adobe Flash, you can upload files only up to 2 GB).

Error notification in FileSender

Problem description: What should I do if I receive the following message in FileSender?

We are sorry but if you want to use the FileSender service, your account must be verified. For more information how to verify your account, please visit https://eduid.cz.

Solution:

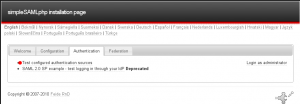

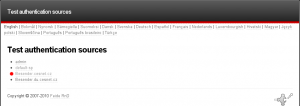

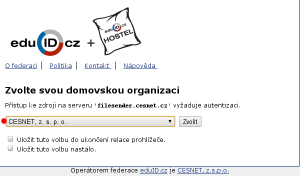

If you have an account in the academic identity federation eduID.cz and FileSender still shows the message above, please visit https://filesender.cesnet.cz/simplesaml/module.php/core/frontpage_auth.php

then choose:

- Test configured authentication sources

then click on filesender.cesnet.cz

then pick your organization (institution)

now please send a screenshot of the resulting page to support(at)cesnet.cz. The page will look like the figure below.

Expiration time for data sent via FileSender

Problem description: What is the maximum file expiration period in FileSender?

Solution: The current version of FileSender allows you to store data for up to one month.

API and command line file upload

Problem description: Is it possible to upload files other than via the web interface, i.e. directly from measuring or computing machines?

Solution: Yes. If the system has a command line and Python 3, you can use the script offered directly in the FileSender interface. Log in to FileSender with your user account (authentication via eduID) and open the “My Profile” section, “Python CLI Client”. There you can download two files: the Python script itself and a configuration file that also contains your API key. Save the configuration file in your home directory as ~/.filesender/filesender.py.ini. The script can be used without the configuration file, but then you must pass at least the API key and the “User identification” (in the format abcdefghijklmnopqrstuvwxyz0123456789@einfra.cesnet.cz) as command-line parameters. You can find this identifier in the Perun system under user → authentication → Login in EINFRAID-PERSISTENT.

Sample upload with downloaded and correctly placed configuration file:

python3 filesender.py -r abcdefghijklmnopqrstuvwxyz0123456789@einfra.cesnet.cz uploaded.file1 uploaded.file2

Sample upload using an API key:

python3 filesender.py -a 1234567890123456789012345678901234567890123456789012345678901234567890 -u abcdefghijklmnopqrstuvwxyz0123456789@einfra.cesnet.cz -r e-mail@recipient.com uploaded.file1 uploaded.file2
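If you call the client from your own measurement or processing pipeline, building the argument list programmatically avoids shell-quoting problems. This Python sketch only assembles the options shown in the samples above (-a, -u, -r followed by the files); the script path and all values are placeholders, and `run_upload` is a hypothetical helper.

```python
import subprocess
from typing import List, Optional, Sequence


def filesender_command(files: Sequence[str], recipient: str,
                       api_key: Optional[str] = None,
                       user: Optional[str] = None,
                       script: str = "filesender.py") -> List[str]:
    """Assemble the command line from the samples above (-a, -u, -r, files).

    Without ~/.filesender/filesender.py.ini, both api_key and user are needed.
    """
    cmd = ["python3", script]
    if api_key is not None:
        cmd += ["-a", api_key]
    if user is not None:
        cmd += ["-u", user]
    cmd += ["-r", recipient, *files]
    return cmd


def run_upload(files: Sequence[str], recipient: str, **options: Optional[str]) -> None:
    """Hypothetical helper: run the upload and raise if the script fails."""
    subprocess.run(filesender_command(files, recipient, **options), check=True)
```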

Globus

Globus and IPv6

Problem description: Does Globus support IPv6?

Solution: It does; however, IPv6 support is currently experimental. For this reason, we have not yet configured IPv6 for Globus on our servers.

Handling archives and backups

Under the new Terms of Service in effect since January 2018, the way data is handled on storage facilities has changed to prevent the facilities from filling up. Data is classified into two categories: permanent archives and backups.

This system applies to storage facilities put into operation since 2018. It does not affect the storage facilities du2 and du3, where all data is considered a permanent archive.

Archives and backups? What are they?

Problem description: What are archives and backups? How do they differ in handling on the storage facilities?

Solution: Archive data has permanent value. On the storage facility, it is limited by quotas (on data size and number of files).

Backup spaces, on the other hand, serve to keep temporary data that does not need to be stored for more than a few months. Storing backups on the storage facility is limited in time: after one year, the storage facility operators are entitled to delete old data. The user is, of course, notified by e-mail in advance. This should suit the majority of users who use the facility for backups; with a reasonable backup strategy, there is no reason to keep older backup data.

The storage facility operator typically provides backup space to users. For permanent archival data, a reasonable explanation and justification of the resource usage is required. Please note that misusing archival space for backup data is a violation of the Terms of Service.

You can find detailed specification of both data types in section 3 of the Terms of Service.

How to recognise backup and archival folders on the storage facility

Problem description: How do I know whether my storage space is archival or backup one?

Solution: It is determined by the folder structure; please see the description of the folder structure.

What if I have a configured archival space and need a space for backups or vice versa?

Problem description: I have a backup space and I need to keep some archival data.

Solution: Please contact your virtual organization manager, who will arrange for the necessary storage space to be added with the storage facility operator.

Migration to new Data Center

I want to move my data, but I cannot log in to Data Storage.

Problem description: I got a notification that I should move my data, but I cannot log in to Data Storage.

Solution: This can have two causes: expired membership in the particular virtual organization (VO), or an expired data moving period.

If you suspect that your VO membership has expired, please check your inbox and make sure you have extended your membership in the VO. If you have not, you can easily submit an extension request (the link is in the email notification about membership extension). Your membership (access to the data storage) will be extended/activated within 60 minutes after the VO admin approves the request.

Did your data moving period expire? The time slot for moving your data is two weeks (14 days) from the first email notification. After that, your access to the Data Storage is restricted so that other groups of users can be moved. If you did not finish moving your data, please contact the Data Storage administrators and they will grant you additional access to the Data Storage.

I want to move my data, but I have a problem with permissions.

Problem description: I tried to transfer my data using Globus/rsync, but I got a permissions error; see the log below:

Error (transfer)

Endpoint: ducesnet#globusonline (d8eb370a-6d04-11e5-ba46-22000b92c6ec)

Server: globus.du4.cesnet.cz:2811

File: /~/VO_storage-cache_tape/backups/tests/fLLgaxLv2R1/4Hcy5vd98A0

Command: RETR ~/VO_storage-cache_tape/backups/tests/fLLgaxLv2R1/4Hcy5vd98A0

Message: Fatal FTP response

---

Details: 500-Command failed. : globus_l_gfs_file_open failed.\r\n500-globus_xio: Unable to open file

/exports/home/username/VO_storage-cache_tape/VO_storage-cache_tape/backups/tests/fLLgaxLv2R1/4Hcy5vd98A0\r\n500-

globus_xio: System error in open: Permission denied\r\n500-globus_xio: A system call failed: Permission denied\r\n500 End.\r\n

Solution: Check and set the correct permissions on the affected files/directories directly on the data storage. If you use a system from the Windows family, you can follow this tutorial.

Please connect to the data storage using ssh protocol.

ssh username@ssh.du4.cesnet.cz

Now go to the directory you wish to check. Here it is obvious which entries cause the issue: test1 and testfile1.

username@store:~>cd VO_storage-cache_tape
username@store:~/VO_storage-cache_tape>ls -alh
total 84K
drwx-----T    4 username storage  82 May  3 09:57 .
drwxr-x--- 1378 root     storage 56K May  3 13:30 ..
d---------    2 username storage  10 May  3 09:56 test1
drwxr-xr-x    2 username storage  10 May  3 09:56 test2
----------    1 username storage   0 May  3 09:56 testfile1
-rw-r--r--    1 username storage   0 May  3 09:56 testfile2

To find entries in the whole directory tree that the owner cannot read (or, for directories, cannot enter), you can use the following command:

# find -L . \( ! -perm -u=r -o -type d ! -perm -u=x \)

Alternatively, you can use find to correct the missing permissions at the same time:

# find -L . \( ! -perm -u=r -o -type d ! -perm -u=x \) -exec chmod u+rX '{}' \;
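A Python sketch of the same check, for when you prefer a script or want to review the hits before changing anything: it reports entries the owner cannot read, plus directories the owner cannot enter, and with fix=True applies the equivalent of chmod u+rX. Unlike find -L, it does not follow symbolic links; run it on the data storage (for example over SSH). `find_unreadable` is our own helper name.

```python
import os
import stat
from typing import List


def find_unreadable(top: str, fix: bool = False) -> List[str]:
    """Report entries the owner cannot read, and directories the owner cannot enter.

    With fix=True, each hit gets the equivalent of `chmod u+rX`: owner read,
    plus owner execute for directories and for files that already have some
    execute bit. Symbolic links are skipped. Without fix=True, the contents of
    directories that cannot be opened are not inspected (os.walk skips them).
    """
    hits = []
    # Top-down walk: a directory fixed here is opened only afterwards, so the
    # walk can then descend into it.
    for root, dirs, files in os.walk(top):
        for name in dirs + files:
            path = os.path.join(root, name)
            st = os.lstat(path)
            if stat.S_ISLNK(st.st_mode):
                continue
            mode = stat.S_IMODE(st.st_mode)
            is_dir = stat.S_ISDIR(st.st_mode)
            unreadable = not mode & stat.S_IRUSR
            unenterable = is_dir and not mode & stat.S_IXUSR
            if not (unreadable or unenterable):
                continue
            hits.append(path)
            if fix:
                new_mode = mode | stat.S_IRUSR
                if is_dir or mode & (stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH):
                    new_mode |= stat.S_IXUSR
                os.chmod(path, new_mode)
    return hits
```

For example, `find_unreadable(os.path.expanduser("~/VO_storage-cache_tape"), fix=True)` for the directory from the listing above.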

Object storage S3

The CESNET S3 service uses the naming convention domain.cz/tenant:bucket, where the tenant is the short name of the VO and the domain is s3.clX.du.cesnet.cz. This convention differs from AWS S3, which uses the format “bucket.domain.com”. If you do not specify the tenant explicitly, it should be recognized automatically based on your access key and secret key, so the following format should be sufficient: s3.clX.du.cesnet.cz/bucket

If your client treats the endpoint as native AWS, you have to switch it to an S3-compatible endpoint. Most clients can handle both formats automatically; however, in some cases the format has to be specified explicitly.

cl1 - https://s3.cl1.du.cesnet.cz
cl2 - https://s3.cl2.du.cesnet.cz
cl3 - https://s3.cl3.du.cesnet.cz
cl4 - https://s3.cl4.du.cesnet.cz
cl5 - https://s3.cl5.du.cesnet.cz
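The path-style naming can be captured in a few lines. This Python sketch builds object URLs for the cl1 to cl5 endpoints listed above, with the tenant optional as described; the bucket, key and tenant values are placeholders, and percent-encoding the key is our own choice.

```python
from typing import Optional
from urllib.parse import quote

CLUSTERS = {1, 2, 3, 4, 5}  # the cl1 to cl5 endpoints listed above


def s3_endpoint(cluster: int) -> str:
    if cluster not in CLUSTERS:
        raise ValueError(f"unknown cluster cl{cluster}")
    return f"https://s3.cl{cluster}.du.cesnet.cz"


def s3_object_url(cluster: int, bucket: str, key: str = "",
                  tenant: Optional[str] = None) -> str:
    """Path-style URL: endpoint/[tenant:]bucket[/key]."""
    # Unlike AWS virtual-hosted style (bucket.domain.com), the bucket goes into the path.
    container = f"{tenant}:{bucket}" if tenant else bucket
    url = f"{s3_endpoint(cluster)}/{container}"
    if key:
        url += "/" + quote(key)  # keep "/" separators, escape other special characters
    return url
```

With boto3, the equivalent client setting is `boto3.client("s3", endpoint_url=s3_endpoint(4), config=botocore.config.Config(s3={"addressing_style": "path"}))`, which keeps the bucket in the path instead of the host name.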

Personal S3 account

A personal S3 account is the basic S3 service. It is suited for your personal working/research data that you do not need to share with other users/groups. To obtain a personal S3 account, just follow this guide.

Linux clients

AWS-CLI

AWS CLI (Amazon Web Services Command Line Interface) is a standardized tool supporting the S3 interface. With it, you can handle your data and configure your S3 data storage. You can use it from the command line or incorporate it into your automated scripts. Tutorial for AWS CLI

s3cmd

S3cmd is a free command-line tool for uploading and downloading data. You can also use it to configure your S3 storage. S3cmd is written in Python. It is an open-source project available under the GNU General Public License v2 (GPLv2) for both personal and commercial use. s3cmd guide.

Rclone - data synchronization

Rclone is suitable for data synchronization and data migration between multiple endpoints (even between different data storage providers). Rclone preserves timestamps and verifies checksums. It is written in Go and is available for multiple platforms (GNU/Linux, Windows, macOS, BSD and Solaris). The following guide demonstrates its use on Linux and Windows. Rclone guide.

s5cmd for very fast transfers

If you have a 1-2 Gbps connection and want to optimize transfer throughput, you can use the s5cmd tool. s5cmd is available as precompiled binaries for Windows, Linux and macOS, as well as in source code form and as Docker images. Which option to choose depends on the system where you want to use s5cmd. A complete overview can be found in the GitHub project. The guide for s5cmd can be found here.

VEEAM

VEEAM is a tool for backup, recovery, replication, etc.

Windows clients

Below are listed several Windows clients with corresponding guides.

WinSCP

WinSCP is a popular SFTP and FTP client for Microsoft Windows. It transfers files between your local computer and remote servers using the FTP, FTPS, SCP, SFTP, WebDAV or S3 protocols. The guide for WinSCP

CloudBerry Explorer for Amazon S3

CloudBerry Explorer is an intuitive file browser for your S3 storage. It has two panes: one shows your local disk and the other shows the remote S3 storage, and you can drag and drop files between them. The guide for CloudBerry Explorer.

S3 Browser

S3 Browser is a freeware tool for Windows to manage your S3 storage, upload and download data. The Guide for S3 Browser.

CyberDuck

CyberDuck is a multifunctional tool for various types of data storage (FTP, SFTP, WebDAV, OpenStack, OneDrive, Google Drive, Dropbox, etc.). CyberDuck provides only basic functionality; most of the advanced functions are paid. Guide for CyberDuck

Mountain Duck

Mountain Duck is an extension of Cyberduck for mounting remote storage as drives in your file browser, so you can work with your remote data as if it were on your local disk. Mountain Duck can connect cloud data storage as a disk in Finder (macOS) or in the file browser (Windows).

Advanced S3 functionalities

Sharing S3 objects

S3 objects versioning

In your S3 storage, you can keep multiple versions of your objects in one bucket. This allows you to recover objects that have been deleted or overwritten. The guide for S3 object versioning

Sharing the objects within tenant
